Help-AI

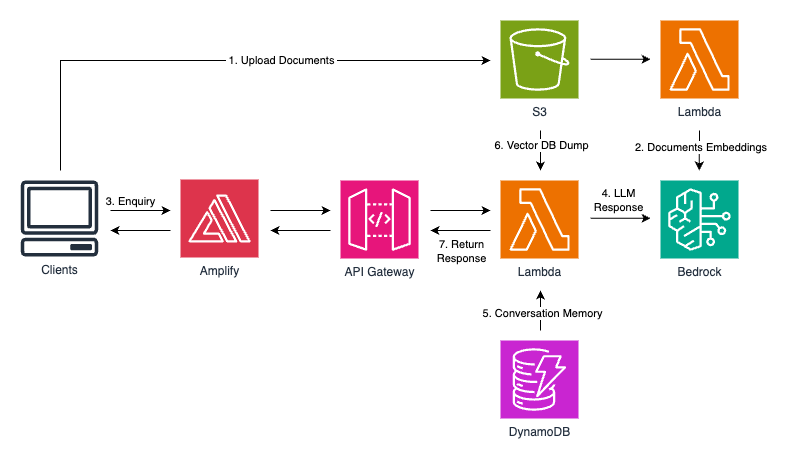

Help-AI embeds an AI-driven chatbox into any website. The chatbox answers user queries by drawing on context from PDF documents stored in AWS S3, so its responses stay grounded in the documents an admin provides rather than in the model's general knowledge alone.

Key Features

- AI-Powered Responses: Utilizing Claude-v2 for natural language processing and understanding.

- PDF Contextualization: Admins upload PDF documents to AWS S3, and the AI draws on their content to answer questions.

- Seamless Integration: The chatbox can be embedded into any website.

- Responsive Design: Built with React and styled using Tailwind CSS for a sleek, responsive interface.

- Scalable and Secure: Leveraging AWS infrastructure for robust performance and security.
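
The PDF contextualization feature comes down to finding the passages most relevant to a question and handing them to the model. Below is a minimal sketch of that idea, assuming the PDF text has already been extracted into a string. The word-overlap scoring is a simplified stand-in for the embedding-based similarity search that Bedrock embeddings would provide, and the names `split_into_chunks`, `tokenize`, and `top_chunks` are hypothetical, not taken from the project:

```python
import re


def split_into_chunks(text: str, max_words: int = 40) -> list[str]:
    """Split extracted PDF text into chunks of at most max_words words."""
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks sharing the most words with the question.

    Word overlap is a stand-in for embedding similarity (an assumption).
    """
    question_words = tokenize(question)
    ranked = sorted(
        chunks,
        key=lambda chunk: len(question_words & tokenize(chunk)),
        reverse=True,
    )
    return ranked[:k]


# Illustrative text only; a real deployment would read this from the PDF.
manual_text = (
    "To file a complaint open the complaint form and describe the issue. "
    "To reset your password use the forgot password link on the login page."
)
chunks = split_into_chunks(manual_text, max_words=12)
context = top_chunks("How do I reset my password?", chunks, k=1)
```

The selected chunks would then be passed to the model as context alongside the user's question.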

Technology Stack

- AWS Services: Serverless architecture.

- Amazon Bedrock for serverless embedding and inference.

- LangChain to orchestrate a Q&A LLM chain.

- Amazon DynamoDB for serverless conversational memory.

- AWS Lambda for serverless compute.

- AWS Amplify for hosting.

- Git: For version control.

- Python: Backend scripting and data processing.

- React: Frontend framework for building the chatbox interface.

- Tailwind CSS: For styling the user interface.
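
To show how the serverless pieces listed above might fit together, here is a minimal sketch of a Lambda handler behind an API Gateway proxy integration. The event shape (`body` as a JSON string) and the response keys follow the proxy integration format. The field names `session_id` and `question`, the `answer_question` helper, and the in-memory `_MEMORY` dict standing in for the DynamoDB conversation table are all assumptions for illustration, not the project's actual code:

```python
import json

# In-memory stand-in for the DynamoDB conversation table (assumption).
_MEMORY: dict[str, list[dict]] = {}

_JSON_HEADERS = {"Content-Type": "application/json"}


def answer_question(question: str, history: list[dict]) -> str:
    """Hypothetical placeholder for the Bedrock/LangChain Q&A call."""
    return f"(answer to: {question})"


def handler(event: dict, context: object) -> dict:
    """Handle one chat request and record the turn under its session."""
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        payload = {}
    if not isinstance(payload, dict):
        payload = {}

    question = str(payload.get("question", "")).strip()
    session_id = str(payload.get("session_id", "anonymous"))
    if not question:
        return {
            "statusCode": 400,
            "headers": _JSON_HEADERS,
            "body": json.dumps({"error": "question is required"}),
        }

    # Load prior turns so follow-up questions can see earlier answers.
    history = _MEMORY.setdefault(session_id, [])
    answer = answer_question(question, history)
    history.append({"question": question, "answer": answer})

    return {
        "statusCode": 200,
        "headers": _JSON_HEADERS,
        "body": json.dumps({"answer": answer, "turns": len(history)}),
    }
```

Keying the history by session is what lets a stateless Lambda function carry a conversation across requests. In a deployed version the dict would be replaced by reads and writes against the DynamoDB table.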

Prompt Template

A prompt template constrains how the AI responds: which languages it answers in, how it handles questions outside the user manual, and what tone it uses. Here’s an example of the template used to query the AI:

    from langchain_core.prompts import ChatPromptTemplate

    # System messages set the assistant's rules; the final user message
    # carries the {topic} placeholder that is filled in at query time.
    prompt_template = ChatPromptTemplate.from_messages([
        ("system", "You are a helpful assistant specialized in providing information from a user manual for a complaint system."),
        ("system", "You will respond only in English or Bahasa Melayu."),
        ("system", "If a question is asked in English, answer in English."),
        ("system", "If a question is asked in Bahasa Melayu or Bahasa Indonesia, answer in Bahasa Melayu."),
        ("system", "You will provide answers based on the context provided from the user manual."),
        ("system", "You will not apologize ('sorry' or 'maaf') in your responses."),
        ("system", "You will not include unnecessary information in your answers."),
        ("system", "You will respond in the simplest form possible."),
        ("system", "If a question is outside the context of the user manual, inform the user to ask based on the context provided in the simplest manner."),
        ("system", "Maintain a polite and professional tone in all responses."),
        ("system", "If a query is unclear, ask the user for clarification."),
        ("system", "Use examples from the user manual when appropriate to illustrate points."),
        ("system", "If a user repeats a query or asks a follow-up question, provide consistent information."),
        ("system", "If the user manual content is missing or incomplete, inform the user that the information is not available."),
        ("user", "Please provide information on {topic}")
    ])
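
The final `user` message contains a `{topic}` placeholder that is filled in at query time. As a rough illustration of that substitution in plain Python (this mimics the idea only and is not LangChain's implementation; `render_messages` is a hypothetical helper, and the topic string is made up):

```python
def render_messages(
    messages: list[tuple[str, str]], **variables: str
) -> list[dict]:
    """Fill {placeholders} in each message and return role/content dicts."""
    return [
        {"role": role, "content": text.format(**variables)}
        for role, text in messages
    ]


messages = [
    ("system", "You will respond only in English or Bahasa Melayu."),
    ("user", "Please provide information on {topic}"),
]
rendered = render_messages(messages, topic="filing a complaint")
print(rendered[-1]["content"])
# prints "Please provide information on filing a complaint"
```

With LangChain itself, the equivalent step is calling `prompt_template.format_messages(topic=...)` on the template.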

Disclaimer: This project was originally developed by Pascal Vogel for the AWS Samples repository. This version is an experimental project for exploring prompt engineering, Amazon Bedrock, and LLM technologies. Some modifications have also been made to simplify the architecture. Original repository can be found here.